AI

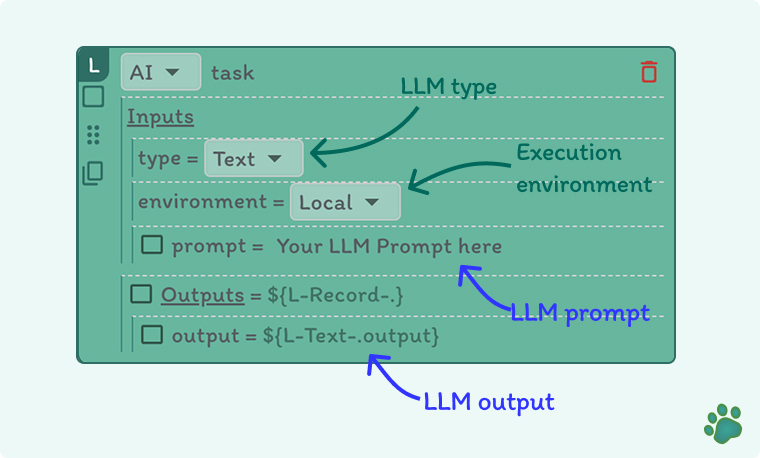

The AI step in Snappit calls a large language model (LLM) with a user-defined prompt and configuration. Use it to generate dynamic output, extract information, or perform reasoning tasks directly within a workflow.

🎯 Purpose

To invoke an LLM with a custom prompt and parameters, then return structured or unstructured output depending on the configuration.

🧭 Behavior

- Sends a prompt to an LLM based on the provided environment settings

- Accepts an execution environment, either local or remote

- Supports two response types: raw text or structured JSON

- When the type is json, validates the response against the output schema, if one is provided

- Makes the LLM response available for use in later steps
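The behavior above can be sketched in Python. This is a rough illustration under stated assumptions, not Snappit's implementation: `run_ai_step`, `call_model`, `check_against_schema`, and `fake_model` are hypothetical names invented here, the model call is stubbed out, and the schema check covers only object responses, `required` keys, and basic property types rather than full JSON Schema.

```python
import json
from typing import Any, Callable

# Maps a few JSON Schema type names to Python types (small subset, illustration only).
_TYPES = {"string": str, "number": (int, float), "integer": int,
          "boolean": bool, "object": dict, "array": list}


def check_against_schema(data: Any, schema: dict) -> None:
    """Simplified check: object response, required keys, basic property types."""
    if not isinstance(data, dict):
        raise ValueError("response is not a JSON object")
    for key in schema.get("required", []):
        if key not in data:
            raise ValueError(f"missing required field: {key}")
    for key, spec in schema.get("properties", {}).items():
        expected = _TYPES.get(spec.get("type"))
        if key in data and expected and not isinstance(data[key], expected):
            raise ValueError(f"field {key!r} is not of type {spec['type']}")


def run_ai_step(config: dict, call_model: Callable[[str, str], str]) -> Any:
    """Send the prompt, then return raw text or parsed (and checked) JSON."""
    raw = call_model(config["prompt"], config["environment"])
    if config["type"] == "text":
        return raw
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    schema = config.get("outputSchema")
    if schema is not None:
        check_against_schema(data, schema)
    return data


def fake_model(prompt: str, environment: str) -> str:
    """Stand-in for a real LLM call; always returns the same JSON string."""
    return '{"sentiment": "positive", "score": 0.9}'


config = {
    "prompt": "Classify the sentiment of this review.",
    "environment": "local",
    "type": "json",
    "outputSchema": {
        "type": "object",
        "required": ["sentiment"],
        "properties": {"sentiment": {"type": "string"},
                       "score": {"type": "number"}},
    },
}

result = run_ai_step(config, fake_model)
print(result["sentiment"])
```

Passing the model call in as a function keeps the sketch independent of any particular provider, and the returned value stands in for what later steps would consume.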

⚙️ Configuration

- prompt: The text prompt to send to the LLM

- environment: Specifies where the model executes, either `local` or `remote`

- type: Defines the expected response format, either `text` or `json`

- outputSchema (optional): A JSON schema used to validate the LLM response if the type is `json`
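As an illustration, a configuration for a JSON-typed step might look like the following, written here as a Python dictionary. The field names and allowed values come from the list above; the prompt text, the schema contents, and the `validate_config` helper are assumptions made up for this sketch, and Snappit's actual configuration syntax may differ.

```python
ALLOWED_ENVIRONMENTS = {"local", "remote"}
ALLOWED_TYPES = {"text", "json"}


def validate_config(config: dict) -> list[str]:
    """Return a list of problems; an empty list means the config looks well-formed."""
    problems = []
    prompt = config.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        problems.append("prompt must be a non-empty string")
    if config.get("environment") not in ALLOWED_ENVIRONMENTS:
        problems.append("environment must be 'local' or 'remote'")
    if config.get("type") not in ALLOWED_TYPES:
        problems.append("type must be 'text' or 'json'")
    # Assumption: a schema on a text-typed step is treated as a mistake here;
    # the real step may simply ignore it.
    if "outputSchema" in config and config.get("type") != "json":
        problems.append("outputSchema only applies when type is 'json'")
    return problems


# Hypothetical example values, for illustration only.
example_config = {
    "prompt": "Extract the customer's name and order number from this email.",
    "environment": "remote",
    "type": "json",
    "outputSchema": {
        "type": "object",
        "required": ["name", "orderNumber"],
        "properties": {
            "name": {"type": "string"},
            "orderNumber": {"type": "string"},
        },
    },
}

print(validate_config(example_config))
```

A text-typed step would use the same shape with `"type": "text"` and no `outputSchema`.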

✅ Use Cases

- Generating dynamic responses for customer communication

- Summarizing content or rephrasing inputs in workflows

- Extracting structured information using intelligent prompting

- Performing decision-making or branching logic based on LLM output

The AI step enhances your workflow with flexible, intelligent automation by integrating LLMs directly into the process.